Multithreading and other optimizations in Core

*Edit, after readings Waffle's much better explanation on the topic I have learned that my explanation is inaccurate, and this is in fact not multithreading even though it is multiple "threads". I recommend you read Waffles reply first, and disregard my explanations for most of this, but the numbers are accurate.

In this I am going to be covering Multithreading and its Pros and Cons for code in Core. I would also appreciate it if others add onto this with their own observations and methods that they have found.

It is best to note that these optimizations are intended for code with loops or nested loops containing lots of logic.

First, I will display the data and then the code for each of the things. This was benchmarked on a Ryzen 5 3600 with no background apps open using the os.clock() function for maximal accuracy.

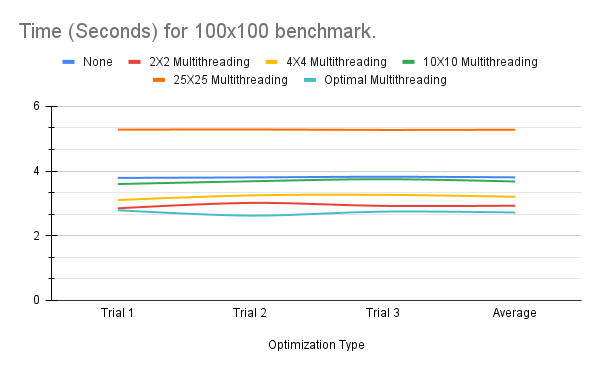

Benchmark one (100x100)

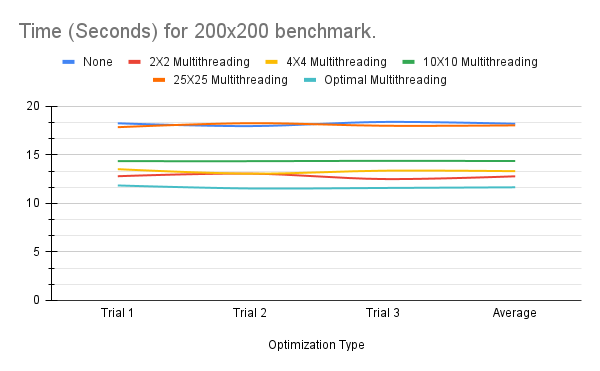

Benchmark two (200x200)

Important Note

Even though the Multithreading trials typically completed faster, it is important to note that the fps during the runtime of these was much lower than that of not multithreading.

Code for different optimizations

No optimization:

for x = 0, SizeX do

for y = 0, SizeY do

genTile(x, y)

end

Task.Wait() //To avoid instruction limit

end

Multithreading:

(xMultithreadingCount and yMultithreadingCount are either 2X2, 4X4, 10X10, or 25X25 depending on benchmark)

for xThread = 0, xMultithreadingCount - 1 do

Task.Spawn(function()

for x = xThread * SizeX/xMultithreadingCount, (xThread + 1) * SizeX/xMultithreadingCount do

Task.Spawn(function()

for yThread = 0, yMultithreadingCount - 1 do

Task.Spawn(function()

for y = yThread * SizeY/yMultithreadingCount, (yThread + 1) * SizeY/yMultithreadingCount do

genTile(x,y)

end

end)

end

end)

end

end)

end

Optimal Multithreading

for x = 0, SizeX do

Task.Spawn(function()

for y = 0, SizeY do

genTile(x,y)

end

end)

end

Some background info on what Task.Spawn() does and what Multithreading is.

Edit* I have removed my explanation, as Waffle has posted a much better and more accurate one in the replies.

What is going on here?

I honestly don't really know.

This is why I am asking for others to give their thoughts on why this might be happening, but will give my best theory below.

My best theory

*Please read Waffles reply in the Replies as his reply gives a more accurate explanation.

Why is Single Threaded slow?

As far as I know the greatest factor that is slowing down the single threaded code is the Instruction Limit which forces us to have the Task.Wait() which adds great delay.

Why is Multi Threaded slower with more threads?

My best guess for this is that after the point where you are no longer suffering from instruction limit, having more threads doesn't make it any faster, but actually makes it slower from the time it takes to Spawn them and the extra processing power of having more threads.

Why is the "Optimal Multithreading" so fast?

The Optimal Multithreading is fast in speed, but low in fps. Sure its a lot faster than no optimization, but during this time FPS is below 1. The reason it is so much faster is likely because of no Task.Wait()s because it won't go over instruction limit.

Conclusion

So, the conclusion of my observations is that Multithreading is faster* if used correctly. By faster I mean in terms of time taken, however, it does drop FPS by a huge amount. The best place to use it would most likely be in loading screens or such where FPS doesn't really matter, but time does. I still don't have a good explanation for why it is faster other than instruction limit, and spreading the workload out across multiple processes.

Full testing data:

100x100

| Trial | None | 2X2 Multithreading | 4X4 Multithreading | 10X10 Multithreading | 25X25 Multithreading | Optimal Multithreading |

|---|---|---|---|---|---|---|

| Trial 1 | 3.787 | 2.846 | 3.102 | 3.597 | 5.277 | 2.789 |

| Trial 2 | 3.802 | 3.014 | 3.246 | 3.682 | 5.283 | 2.622 |

| Trial 3 | 3.823 | 2.922 | 3.263 | 3.747 | 5.269 | 2.745 |

| Average | 3.804 | 2.927333333 | 3.203666667 | 3.675333333 | 5.276333333 | 2.718666667 |

200X200

| Trial | None | 2X2 Multithreading | 4X4 Multithreading | 10X10 Multithreading | 25X25 Multithreading | Optimal Multithreading |

|---|---|---|---|---|---|---|

| Trial 1 | 18.247 | 12.795 | 13.523 | 14.348 | 17.846 | 11.844 |

| Trial 2 | 17.959 | 13.063 | 13.06 | 14.345 | 18.257 | 11.535 |

| Trial 3 | 18.386 | 12.505 | 13.363 | 14.381 | 17.995 | 11.587 |

| Average | 18.19733333 | 12.78766667 | 13.31533333 | 14.358 | 18.03266667 | 11.65533333 |

If you have a better explanation, or more methods of optimization please leave a reply! I know my explanation is probably a bit off and most likely a bit hard to follow as I don't really have a very good idea of why this is happening the way it is.

Thanks for reading, I hope this helped you out, and be sure to check the replies! Have an awesome day and may your code be optimized!