Game Design & Science

An Optimization Experiment in Kingdom Fall

Hi everyone! I teamed up with CraterMan, the developer behind Kingdom Fall, to explore ways of improving the game, as well as to learn about player behavior in Core games. Kingdom Fall is an action RPG where you travel to multiple realms, slay monsters and improve your gear.

We noticed the frame rate in Kingdom Fall could be improved, and saw it as an opportunity to test some new profiling and analytics tools: to locate the problem and to measure the impact of frame rate on player engagement. We concluded that between 6.1% and 27.3% of players were leaving the game almost immediately because of low frame rate. The optimization discussed here increased average session time by 11.7%. D1 retention rose by 0.34%, but that change is within the margin of error.

Fun. We are here because we love games. Through this passion we have developed an intuitive understanding of what the word 'fun' means and what makes a game better. However, game design also has an analytical, methodical side that complements the artistic one. In this post I discuss profiling, frame rate, ways to measure player engagement, how to analyze those measurements, and how to act on the results to improve your game.

The Problem

To test performance we used two PCs: an i7+2080 and an i5+1060.

For modern PC games the target frame rate should be 60 fps (frames per second) or better. Using Core's Play Mode Profiling (PMP) we observed that Kingdom Fall had a frame rate below 60 fps on both machines.

Play Mode Profiling tool in action. The offending script is highlighted in red.

PMP revealed that each frame had a CPU cost of about 22 ms (milliseconds), resulting in roughly 45 fps on the i7+2080. To get smooth gameplay and stay above the desired 60 fps, each frame should cost no more than 16.7 ms.

1000 ms / 45 fps ≈ 22.2 ms per frame

1000 ms / 60 fps ≈ 16.7 ms per frame
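The conversion above can be sketched in a few lines of plain Lua. The function names are illustrative and not part of Core or PMP.

```lua
-- Convert between frame rate (fps) and per-frame time (ms).
-- Both helper names are hypothetical, chosen for this sketch.
local function frameTimeMs(fps)
    return 1000 / fps
end

local function framesPerSecond(frameMs)
    return 1000 / frameMs
end

print(string.format("%.1f ms", frameTimeMs(45)))       -- 22.2 ms
print(string.format("%.1f ms", frameTimeMs(60)))       -- 16.7 ms
print(string.format("%.1f fps", framesPerSecond(22)))  -- 45.5 fps
```

Thinking in milliseconds rather than fps makes costs additive: the cost of each script can be summed and compared directly against the 16.7 ms budget.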

The i7+2080 is a high-end PC and was running at only 45 fps. We suspected the game was not playable for folks with low-end PCs, but we did not assume this to be true, because we didn't know how many players belonged to that category.

Shop items in Kingdom Fall have a client-side animation that rotates and moves them up/down.

Kingdom Fall has many different worlds connected by portals, each with several game areas. To focus the efforts, only the starting town area in world 1 was tested. This is the part of the game with the biggest impact on new players. Any performance gains in that area should be applicable to other areas.

Based on PMP data, we found a script consuming too much CPU. The purpose of the script is to animate shop items so they move up and down and look nice. With 75 shop items throughout world 1, the total cost of animating all items averaged 8.6 ms/frame. Out of a 16.7 ms budget, that leaves only 8.1 ms for the rest of the game. That was not enough, and the total frame cost came to about 22 ms.
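The budget arithmetic in this paragraph can be written out as a small sketch. The three constants come from the measurements quoted above; the derived per-item cost and budget share are simple divisions, not separate measurements.

```lua
-- Frame budget breakdown, using the figures quoted in the text.
local FRAME_BUDGET_MS = 16.7  -- budget for 60 fps
local SCRIPT_COST_MS = 8.6    -- average cost of the shop animation script
local ITEM_COUNT = 75         -- shop items in world 1

local remainingMs = FRAME_BUDGET_MS - SCRIPT_COST_MS
local costPerItemMs = SCRIPT_COST_MS / ITEM_COUNT
local budgetSharePercent = SCRIPT_COST_MS / FRAME_BUDGET_MS * 100

print(string.format("Remaining budget: %.1f ms", remainingMs))        -- 8.1 ms
print(string.format("Cost per item:    %.3f ms", costPerItemMs))      -- 0.115 ms
print(string.format("Share of budget:  %.1f%%", budgetSharePercent))  -- 51.5%
```

Each item is cheap on its own, but 75 of them together use about half of the frame budget, which is why reducing the number of animated items is an effective lever.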

To keep consistent metrics while using PMP, run the game in Play Mode and do not move the character or camera. The player is set up to always spawn in the same location and orientation, after which you simply open PMP with the F4 key and look at the data. To test different areas of the game you can simply move the spawn point – no need to travel with the character.

The Fix

We first disabled the script completely, as a quick test, to estimate how much could be gained if it were optimized. To our surprise, with the script disabled the game already ran at 60 fps on the i7+2080. This step also confirmed where the fix was needed, so we would not spend time changing something unrelated.

We thought about potential solutions. The easiest fix would be to disable the shop animations altogether. It would save development time, but they add a nice touch to the game.

Another solution, one that does not trade away quality, is to stop animating shop items when they are outside the player's view. Items that are in view but very far away do not need to be animated either. We can, in fact, completely disable the objects if they are out of view, such as when they are behind the camera.

To determine if a shop item is within the player's view we use a dot product between the camera's forward vector and the vector from the player's position to the shop item's position.

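    -- Vector from the player to the shop item
    local v = shopItemPosition - playerPosition
    -- In Core, `..` between two Vector3 values returns their dot product
    local dot = v .. cameraDirection
    if dot > 0 then
        -- Item is probably in view
    end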

This algorithm catches all items in view, but also sometimes catches items that are not in view. There are more accurate methods, but this one is computationally cheap and proves sufficient to solve the problem. There is no need to improve it at the moment.
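For readers who want to try the test outside of Core, here is a minimal sketch in plain Lua. It uses plain tables instead of Core's Vector3, and it adds the distance cutoff mentioned earlier. The helper names and the `MAX_ANIMATE_DISTANCE` value are assumptions for illustration, not taken from the Kingdom Fall script.

```lua
-- Plain-table vectors so the sketch runs in any Lua interpreter.
-- In Core you would use Vector3 values and its built-in operators instead.
local function subtract(a, b)
    return { x = a.x - b.x, y = a.y - b.y, z = a.z - b.z }
end

local function dotProduct(a, b)
    return a.x * b.x + a.y * b.y + a.z * b.z
end

-- Hypothetical cutoff: items farther away than this are not animated.
local MAX_ANIMATE_DISTANCE = 3000

local function shouldAnimate(itemPosition, playerPosition, cameraForward)
    local toItem = subtract(itemPosition, playerPosition)
    -- A dot product of zero or less means the item is beside or behind the view direction.
    if dotProduct(toItem, cameraForward) <= 0 then
        return false
    end
    -- Compare squared distances to avoid a square root per item.
    return dotProduct(toItem, toItem) <= MAX_ANIMATE_DISTANCE * MAX_ANIMATE_DISTANCE
end

local player = { x = 0, y = 0, z = 0 }
local forward = { x = 1, y = 0, z = 0 }
print(shouldAnimate({ x = 100, y = 0, z = 0 }, player, forward))   -- true
print(shouldAnimate({ x = -100, y = 0, z = 0 }, player, forward))  -- false
print(shouldAnimate({ x = 5000, y = 0, z = 0 }, player, forward))  -- false
```

The check costs a handful of multiplications per item, far less than updating the item's position and rotation every frame.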

Edit: For reference, I have published a community content piece that uses this optimization. It's called Resource Pickup Optimization. It comes with a script called SharedBobRotate that uses this algorithm.

A/B Test

We had a fix to the problem, and now wanted to measure its impact. To do this we used an A/B test.

An A/B test is an experiment where two similar versions of a program are shown to users. Each user will either see program A or B, at random, and they will consistently see the version assigned to them, even if they leave and come back later. Data is tracked on how the users interact with the product and then statistical analysis determines which version of the product is best.
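One common way to get a stable assignment is to derive the group from a hash of the player's ID, so the same player always lands in the same group without any stored state. The sketch below illustrates that idea only. It is not how the Funnel Analytics tool assigns groups, and both function names are hypothetical.

```lua
-- Simple polynomial string hash, kept within a safe numeric range.
local function hashString(text)
    local hash = 0
    for i = 1, #text do
        hash = (hash * 31 + string.byte(text, i)) % 2147483647
    end
    return hash
end

-- The same player ID always maps to the same group.
local function assignGroup(playerId)
    if hashString(playerId) % 2 == 0 then
        return "A"
    end
    return "B"
end

print(assignGroup("abc"))  -- A
print(assignGroup("abc"))  -- A (same ID, same group)
```

A hash spreads players roughly evenly but not randomly. A tool that draws a random group once and stores it per player achieves the same consistency with a truly random split.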

In this case, we know the optimized version is best. However, the goal is also to understand the impact of low performance on player behavior. This will help us make future decisions on where to spend our time without falling into personal bias: If it runs at 60fps on my PC, that doesn't mean it's good enough yet for the players. We only know what we measure.

To understand the impact of a change you can look at general metrics instead of employing an A/B test. For example, if you look at D1 retention each day before and after the change, you might see a difference. That said, you may have introduced other changes to the game at the same time, and those will impact the data. External factors such as the launch of a movie or a holiday can also impact day-to-day data. An A/B test solves those problems and allows you to make other changes as needed, even while the experiment is still running. As long as both A and B players get the same changes, it's ok.

The Funnel Analytics tool is available in Community Content. See its Readme for usage instructions, or DM me for help.

To conduct the test we used the Funnel Analytics tool from Community Content. While we were busy investigating the performance issues and figuring out a solution, we enabled the Funnel tool in Kingdom Fall, so it would collect preliminary data the week prior to the A/B test.

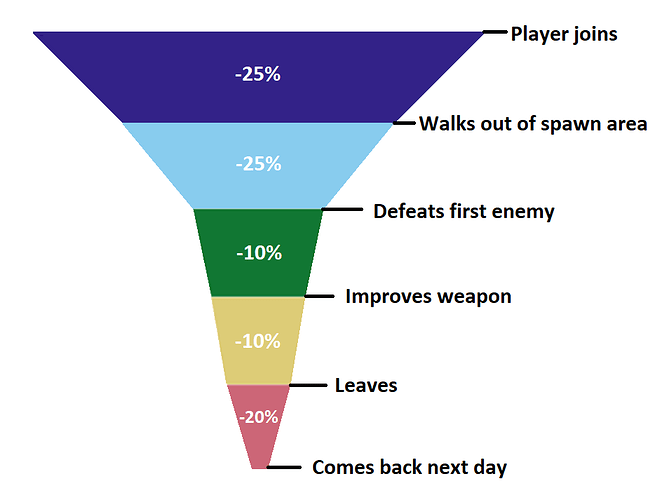

A funnel helps you identify where players leave the game and don't come back. It's a set of data formed by a series of steps that players commonly go through. For example, players move from location 1 to 2, or gain experience levels 2, 3, 4, etc. When a player leaves and doesn't come back, they no longer trigger any steps, resulting in aggregate data where each step has fewer players than the step before.

A hypothetical funnel of new players, showing how many are lost at each step.
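As a rough sketch of how a funnel like this can be summarized, the function below takes the number of players who reached each step and reports the share lost between consecutive steps. The counts are invented for illustration and are not Kingdom Fall data. The function name is hypothetical.

```lua
-- counts[1] is the number of players who joined.
-- counts[i + 1] is the number who reached funnel step i.
local function funnelDropOff(counts)
    local rows = {}
    for i = 2, #counts do
        local previous = counts[i - 1]
        local lost = previous - counts[i]
        rows[#rows + 1] = {
            step = i - 1,
            reached = counts[i],
            lostPercent = lost * 100 / previous,
        }
    end
    return rows
end

-- Hypothetical numbers, for illustration only.
local rows = funnelDropOff({ 1000, 800, 700, 650 })
for _, row in ipairs(rows) do
    print(string.format("Step %d: %d players, %.1f%% lost since previous step",
        row.step, row.reached, row.lostPercent))
end
```

The step with the largest loss is usually the first place to look for a problem.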

The optimization to shop items takes aim at the very first step of the funnel, where we saw an immediate loss of 20% of players as soon as they joined.

To implement the experiment we adjusted the animation script to branch into two code paths. The first is the original code that animates all shop items all the time, while the second one is the optimized version. To determine if a player belongs to group A or B, and thus which code path to use, the animation script asks the Funnel Analytics tool from CC.

The script calls the following:

    local PLAYER = Game.GetLocalPlayer()

    -- Wait until the Funnel Analytics tool has registered its API in _G
    while not _G.Funnel or not _G.Funnel.IsPlayerInTestGroup do
        Task.Wait()
    end

    if _G.Funnel.IsPlayerInTestGroup(PLAYER, 1) then
        -- Code path A (original)
    else
        -- Code path B (optimization)
    end

Results

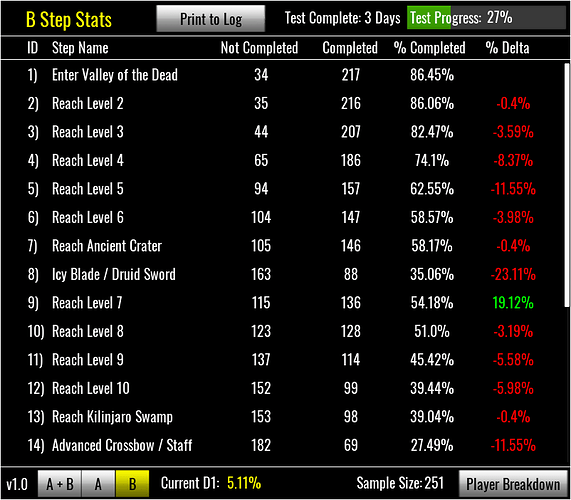

The Funnel tool automatically split the new players into the two groups at random, resulting in 249 players in group A and 251 in group B. We extracted the data and moved it into a spreadsheet, for analysis.

- Session time: This value measures how many seconds a player was in the game, between joining and leaving. We compared the average session time of all players in group A with the average for group B. Players who received the optimization stayed an average of 86 seconds longer (+11.7%).

- Top of the funnel: We set up the first step in the funnel to trigger when players leave the starting town and enter the game's second zone, Valley of the Dead. Group B saw an increase of 6.1% at this step: those who got the optimization are more likely to begin playing. As with all funnels, improvements in the first step trickle down. Steps 2 and 3 happen very quickly in the game, in less than a minute of play, and saw improvements of 11.4% and 9.8%, respectively. As the optimization was the only difference between groups A and B, we can infer that players were leaving because of low performance, either immediately or shortly after engaging with the game. The step 1 gain alone gives a lower estimate of 6.1%, and summing the gains across the three steps (6.1 + 11.4 + 9.8) gives an upper estimate of about 27.3%.

- D1: The D1 metric is the percentage of new players who come back the next day to play again. This is ultimately what we are trying to improve about the game, as it reflects the enjoyment players are having. In the experiment, D1 was 0.34% higher in group B than in group A. That said, the margin of error for this metric is 0.89%, so a sample of 500 players split into two groups is too small to draw a conclusion about D1. If we look further down the funnel, we see other steps with large player drop-offs, where the impact on D1 may be greater.
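To illustrate why a small delta can fall inside the margin of error, here is a minimal sketch of a textbook 95% margin of error for the difference between two retention rates, using the normal approximation. This is not necessarily the method used in our spreadsheet, and the retention rates in the example are hypothetical. Only the group sizes (249 and 251) come from the experiment.

```lua
local Z_95 = 1.96  -- z-score for a 95% confidence level

-- Margin of error for the difference between two proportions.
-- rateA and rateB are fractions between 0 and 1; sizeA and sizeB are group sizes.
local function marginOfError(rateA, sizeA, rateB, sizeB)
    local standardError = math.sqrt(
        rateA * (1 - rateA) / sizeA + rateB * (1 - rateB) / sizeB)
    return Z_95 * standardError
end

-- The difference is only meaningful if it is larger than the margin of error.
local function isSignificant(rateA, sizeA, rateB, sizeB)
    return math.abs(rateB - rateA) > marginOfError(rateA, sizeA, rateB, sizeB)
end

-- Hypothetical D1 rates; group sizes taken from the experiment.
print(isSignificant(0.10, 249, 0.1034, 251))  -- false
```

With groups of about 250 players, only a much larger difference in D1 would stand out from the noise, which is why a longer experiment or a larger player base is needed to measure it.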

A final thing to consider in the results is that we could have accidentally made a mistake in code path A, somehow making it worse than before. Human error does happen! To account for this, we compared usage data from group A with data from before the optimization experiment. We did find a small gap, where the preliminary data looked better than group A. However, we learned that this came from a mistake in the preliminary data (not in the group A data): players who used the Rebirth mechanic were being counted as if they were new players. The small number of Rebirths made the preliminary data look slightly better than reality. This Rebirth mistake was fixed for the optimization A/B experiment. Also, group B results look much better than the preliminary data, so we are satisfied that the optimization does, in fact, make the game better.

Funnel Analytics tool, showing data for group B in Kingdom Fall.

Future Work

There is still a 13.6% loss of players on step 1 of the funnel. That is, players who never even start playing. This suggests that either (1) more optimizations are needed, or (2) the game's design needs to be adjusted at the very beginning, to motivate players to get started. The loss will never be 0%, but it can be lower. A 5% to 10% loss of players in step 1 would be normal for any game.

The optimization change presented herein was a CPU optimization. We chose this problem because it was the largest cost, as seen in the PMP tool. After this change the game is now GPU-bound, which means the GPU is the bottleneck and further CPU improvements will not lead to much better frame rate. We need to investigate what is causing the high GPU demand and see if it's possible to optimize--with or without loss in game quality.

We have also identified some gameplay that uses too many networked objects, more than necessary. Reduction in networked objects is expected to lower the time it takes players to load into the game, as well as reduce the server load. This will likely have a positive result on the first funnel step.

These changes take aim at the very top of the user experience: the moment players join and decide if they want to play or not. We should also look "down the funnel", identifying points where there are large drop-offs in players. The largest drop is usually where the effort should be focused, which is why tracking the data is important.

Furthermore, as the onboarding of new players improves, further work on it will yield diminishing returns. We could apply this methodology to later parts of the game, to understand how dedicated players interact with the systems and look for ways to improve their long-term engagement, beyond D1.

Finally, we aim to apply learnings from this experiment to other games, but also to improve the tools and the workflow.

Data from this experiment: